Blockchain modularity is inevitable. Right?

Over the last year, I’ve been attempting to understand blockchain scalability and how Saga fits within the evolving landscape. To ensure Saga’s success, I needed to understand how various scalability architectures worked, where these paths eventually led, and whether we were on the optimal path. As I dissected the product differences and tradeoffs between existing appchains and rollups, I found myself chasing circular arguments and getting more confused. To anyone who has also spelunked down this rabbit hole and hit a giant wall of utter confusion: I completely empathize.

Everyone always asks me: how does Saga fit within the modular story? I found it pretty difficult to answer. It turns out, the reason why I couldn’t answer this simple question is that Saga doesn’t fit neatly into the current modular narrative at all. This is because the existing modular ecosystem and designs are in their infancy and still evolving.

However, by analyzing how the modular ecosystem came to be and understanding the limitations of the current modular architecture, we can predict where the modular ecosystem is headed.

As it turns out, the future of modularism looks a lot like Saga.

Beginning with the basics: Monolithic Chains

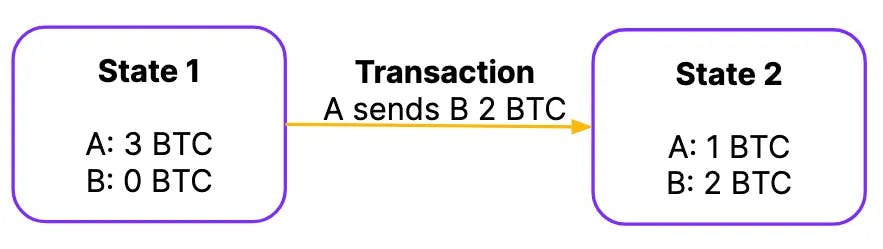

A blockchain is simply a very long list of states and transactions, guaranteed with a certain amount of security.

- State represents the data of the ledger at a specific snapshot

- Transactions trigger state transitions
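To make the "list of states and transactions" idea concrete, here is a minimal Python sketch. It assumes a toy ledger of account balances and a hypothetical `Transfer` transaction type; real chains use far richer state and transaction formats. Each transaction is applied to the latest state snapshot to produce the next one.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """Hypothetical transaction type: move `amount` from sender to recipient."""
    sender: str
    recipient: str
    amount: int


def apply_tx(state: dict[str, int], tx: Transfer) -> dict[str, int]:
    """State transition: return a new snapshot and leave the old one untouched."""
    if tx.amount <= 0:
        raise ValueError("amount must be positive")
    if state.get(tx.sender, 0) < tx.amount:
        raise ValueError(f"insufficient balance for {tx.sender}")
    new_state = dict(state)
    new_state[tx.sender] = new_state.get(tx.sender, 0) - tx.amount
    new_state[tx.recipient] = new_state.get(tx.recipient, 0) + tx.amount
    return new_state


def build_history(genesis: dict[str, int], txs: list[Transfer]) -> list[dict[str, int]]:
    """The 'very long list': the genesis state followed by one snapshot per transaction."""
    history = [genesis]
    for tx in txs:
        history.append(apply_tx(history[-1], tx))
    return history


history = build_history({"alice": 10}, [Transfer("alice", "bob", 3), Transfer("bob", "carol", 1)])
print(history[-1])  # {'alice': 7, 'bob': 2, 'carol': 1}
```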

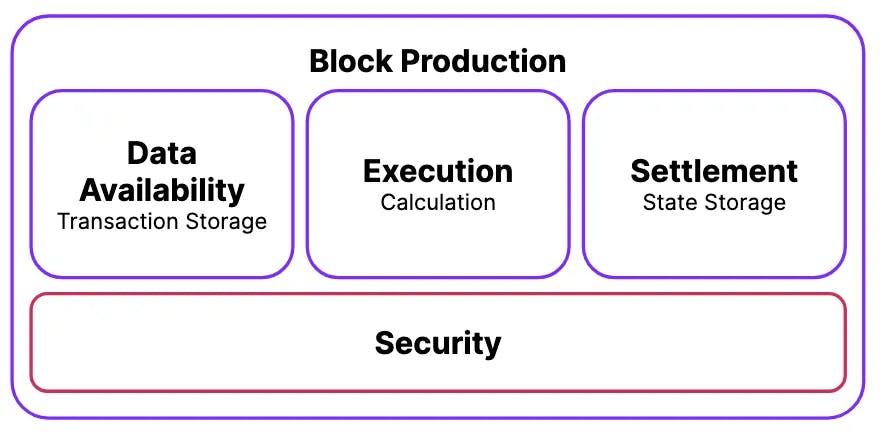

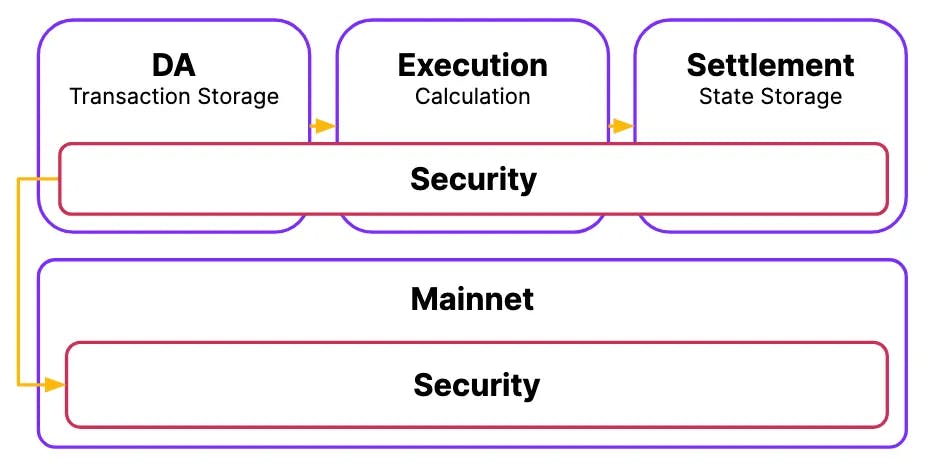

In a monolithic proof of stake blockchain, a committee of block producers (validators) updates the ledger by agreeing on the list of transactions to be included in the next block AND the corresponding state resulting from those transactions. The block production mechanism can be functionally divided into three parts:

- Data Availability (DA) is just a fancy word for “transaction storage.” The DA stores a list of transactions to be included in the block.

- Execution is just a fancy word for “calculation.” Execution computes the updated state based on those transactions.

- Settlement is just a fancy word for “state storage.” Settlement stores the resulting state after the execution.

Every block, a proposer validator prepares the list of transactions (DA), executes those transactions (execution) and posts the resulting state (settlement). After a consensus step in which the other validators vote on the proposed block (in BFT-style proof of stake, more than two-thirds of the stake must approve), the state specified in the settlement stage is anointed as the latest global state.
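The proposer's three stages and the consensus vote can be sketched as separate functions. This is a simplified Python model, not any particular chain's implementation: transactions are hypothetical `(account, balance_delta)` pairs, and the quorum rule assumes a BFT-style "strictly more than two-thirds of stake" threshold. Here the resulting state is only appended to the ledger once the vote passes, which stands in for the "anointing" step.

```python
from __future__ import annotations

from fractions import Fraction


def data_availability(mempool: list[tuple[str, int]], max_txs: int) -> list[tuple[str, int]]:
    """DA ("transaction storage"): fix the ordered list of transactions for the block."""
    return mempool[:max_txs]


def execute(state: dict[str, int], txs: list[tuple[str, int]]) -> dict[str, int]:
    """Execution ("calculation"): derive the post-state from the pre-state."""
    new_state = dict(state)
    for account, delta in txs:
        new_state[account] = new_state.get(account, 0) + delta
    return new_state


def settle(ledger: list[dict[str, int]], state: dict[str, int]) -> None:
    """Settlement ("state storage"): record the resulting state."""
    ledger.append(state)


def has_quorum(approving_stake: int, total_stake: int) -> bool:
    """BFT-style rule: strictly more than 2/3 of total stake must approve."""
    return Fraction(approving_stake, total_stake) > Fraction(2, 3)


def produce_block(
    ledger: list[dict[str, int]],
    mempool: list[tuple[str, int]],
    votes: dict[str, bool],
    stakes: dict[str, int],
    max_txs: int = 100,
) -> bool:
    """Proposer runs DA and execution; validators vote; settlement happens on approval."""
    txs = data_availability(mempool, max_txs)
    proposed_state = execute(ledger[-1], txs)
    approving = sum(stakes[v] for v, approve in votes.items() if approve)
    if not has_quorum(approving, sum(stakes.values())):
        return False  # no consensus: the proposed state never becomes the global state
    settle(ledger, proposed_state)
    return True


stakes = {"v1": 40, "v2": 35, "v3": 25}
ledger = [{"alice": 10}]
print(produce_block(ledger, [("alice", -3), ("bob", 3)], {"v1": True, "v2": True, "v3": False}, stakes))  # True
print(ledger[-1])  # {'alice': 7, 'bob': 3}
```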

A potential issue with the monolithic chain is that there is a theoretical limit to the number of transactions the chain can process. While execution can be somewhat parallelized, any transactions that update the same state need to be processed sequentially. This is akin to the theoretical limits of CPU hyperthreading vs. multicore computation. Just like how CPUs eventually all became multicore, the monolithic chain needs to go modular to unlock true scalability.
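The sequential bottleneck can be illustrated with a conflict-aware scheduler. This Python sketch assumes each transaction declares up front the set of state keys it touches (a simplification; real execution engines detect conflicts in various ways). A transaction is placed in the first batch after every earlier transaction it conflicts with, so batches can run in parallel internally but must run one after another. The number of batches, not the number of cores, bounds the speedup.

```python
from __future__ import annotations


def schedule(txs: list[set[str]]) -> list[list[int]]:
    """Group transaction indices into batches whose members touch disjoint state."""
    last_batch: dict[str, int] = {}  # state key -> batch of the latest tx touching it
    batches: list[list[int]] = []
    for i, keys in enumerate(txs):
        # Must run strictly after every earlier tx that shares a key with this one.
        level = max((last_batch[k] + 1 for k in keys if k in last_batch), default=0)
        if level == len(batches):
            batches.append([])
        batches[level].append(i)
        for k in keys:
            last_batch[k] = level
    return batches


print(schedule([{"alice"}, {"bob"}, {"alice", "carol"}, {"dave"}]))  # [[0, 1, 3], [2]]
print(schedule([{"pool"}] * 4))  # [[0], [1], [2], [3]]
```

The second call shows the worst case: when every transaction touches one hot piece of state (say, a popular liquidity pool), no amount of parallel hardware helps.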

Step 1: Modularizing Execution

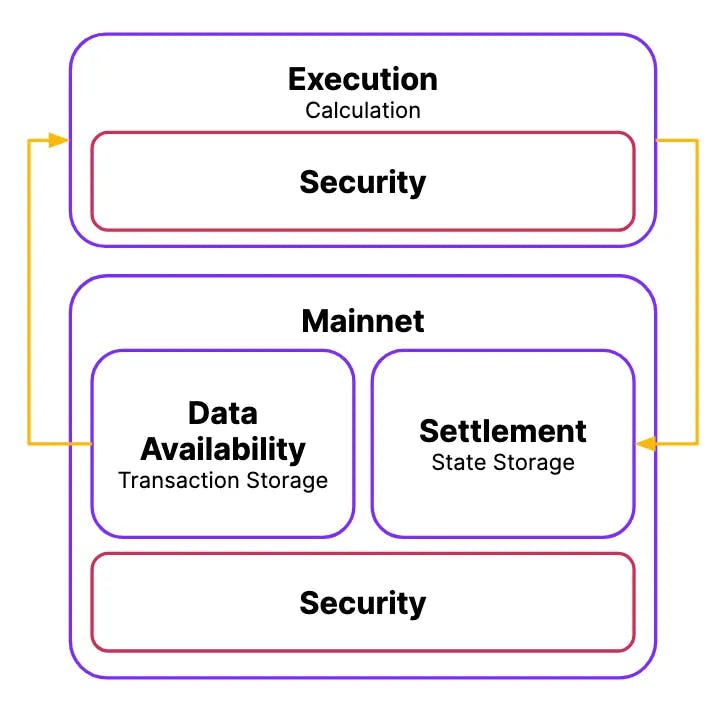

In the block production mechanism, the most computationally heavy component is the execution stage. So one scaling strategy is to offload the execution from the base chain. In this design, the mainnet simply becomes a place to store the transactions and the latest state from the off-chain execution environment.

How do you guarantee that the off-chain execution is submitting valid state to the main chain? We need to inject some amount of security into the execution environment. There are broadly two different methods.

The first method is to enforce correctness computationally. When the execution environment submits the state to the mainnet, it is also required to submit a validity proof that mathematically proves that the state is valid. This method is broadly called “zero knowledge” or ZK.

The second method is to enforce via committee. A committee (like a set of validators or node operators) attests to the accuracy of the execution. We can either implement a full proof of stake consensus on the execution environment (decentralized sequencers), or we can choose to optimistically trust a group of auditors with a challenge period.
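The optimistic variant can be sketched as a claim with a challenge window. In this simplified Python model, the state commitment is a SHA-256 hash of the state's canonical JSON, the window length is a hypothetical constant, and a challenge is a single full re-execution. Production optimistic rollups such as Arbitrum use more elaborate interactive fault-proof protocols, so treat this only as an illustration of the trust model.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import dataclass

CHALLENGE_PERIOD = 7  # hypothetical window length, in abstract time units


def execute(state: dict[str, int], txs: list[tuple[str, int]]) -> dict[str, int]:
    """Toy execution: each tx is an (account, balance_delta) pair."""
    new_state = dict(state)
    for account, delta in txs:
        new_state[account] = new_state.get(account, 0) + delta
    return new_state


def state_root(state: dict[str, int]) -> str:
    """Commit to a state by hashing its canonical JSON encoding."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


@dataclass
class Claim:
    """A state root posted to the mainnet by the execution environment."""
    pre_state: dict[str, int]
    txs: list[tuple[str, int]]
    claimed_root: str
    posted_at: int
    overturned: bool = False


def challenge(claim: Claim, now: int) -> bool:
    """An auditor re-executes the batch; a mismatch inside the window overturns the claim."""
    if now >= claim.posted_at + CHALLENGE_PERIOD:
        return False  # window closed: challenges are no longer accepted
    if state_root(execute(claim.pre_state, claim.txs)) != claim.claimed_root:
        claim.overturned = True
    return claim.overturned


def is_final(claim: Claim, now: int) -> bool:
    """A claim is final once the window passes without a successful challenge."""
    return not claim.overturned and now >= claim.posted_at + CHALLENGE_PERIOD


honest = Claim({"a": 1}, [("a", 2)], state_root({"a": 3}), posted_at=0)
bad = Claim({"a": 1}, [("a", 2)], state_root({"a": 100}), posted_at=0)
print(challenge(honest, now=1), challenge(bad, now=1))  # False True
```

The tradeoff is visible in `is_final`: even an honest claim must wait out the whole window, whereas a validity proof can be checked immediately.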

Once the execution environment is properly secured, you can replicate this process to scale your mainnet by launching many parallel execution environments. These are the L2s, such as Optimism and Arbitrum.

However, this approach eventually runs into scalability issues too. The mainnet’s computational capacity is still limited, and eventually just storing the transactions, storing the state and verifying the correctness of that state will use up the mainnet’s entire computation budget.
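A back-of-the-envelope calculation shows why the ceiling is hard. The cost figures below are made-up abstract units chosen only for illustration, not measurements of any real chain: each L2 consumes a fixed slice of the mainnet's per-block budget, so the number of L2s the mainnet can host is simply the budget divided by the per-L2 cost.

```python
from __future__ import annotations


def max_execution_envs(budget_per_block: int, cost_per_env: dict[str, int]) -> int:
    """How many execution environments fit in the mainnet's per-block budget."""
    return budget_per_block // sum(cost_per_env.values())


# Hypothetical per-block costs (abstract units) that each L2 imposes on the mainnet.
costs = {"store_transactions": 8, "store_state": 1, "verify_state": 1}
print(max_execution_envs(1_000, costs))  # 100

# Same budget if transaction storage no longer had to live on the mainnet.
costs_without_tx_storage = {k: v for k, v in costs.items() if k != "store_transactions"}
print(max_execution_envs(1_000, costs_without_tx_storage))  # 500
```

With these invented numbers, transaction storage dominates, which is the intuition behind removing it from the mainnet.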

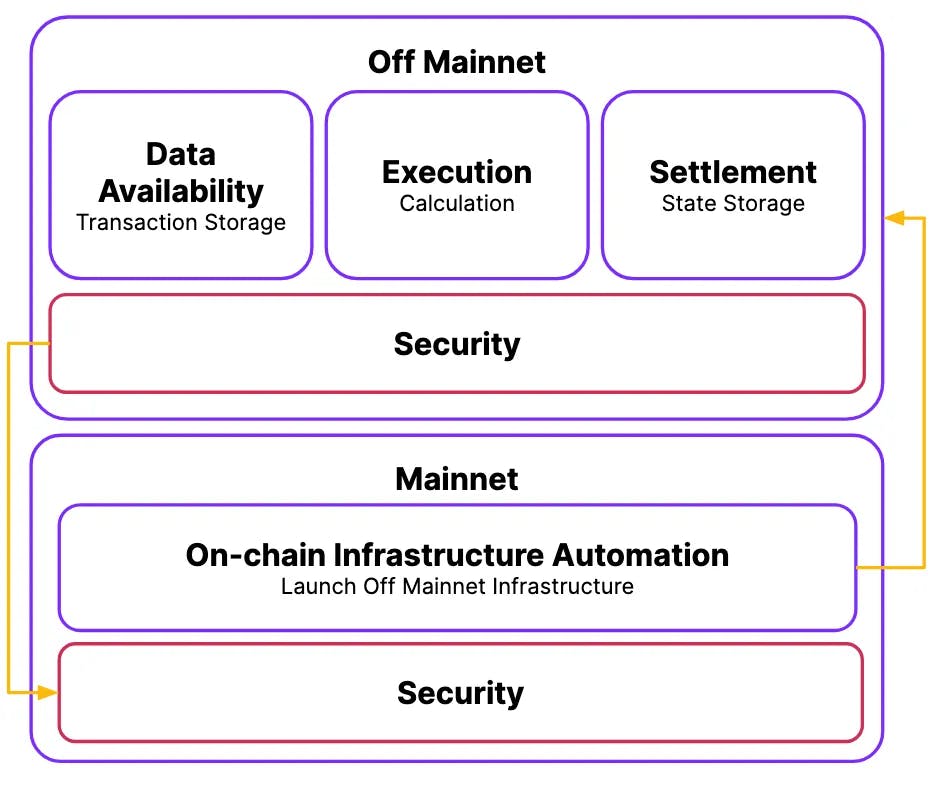

Step 2: Modularizing Data Availability

For this reason, the next component we have seen the ecosystem modularize is data availability. By moving transaction storage off the mainnet, we can hyperspecialize the mainnet to focus only on state storage and verification. This way, we can spin up even more parallel execution environments.

These off-chain DA providers are projects such as Celestia, EigenDA and Avail. However, just like the execution environment, the DA requires some form of security to guarantee that the transaction storage is trustworthy. In the case of Celestia, this is derived from their proof of stake system. With EigenDA, this is derived from restaked Ethereum.
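One way to picture offloading transaction storage: the external DA layer keeps the full batch, and the mainnet keeps only a short commitment to it. This Python sketch uses a plain SHA-256 hash and a hypothetical `ExternalDA` class; production DA layers use more sophisticated commitments (Celestia, for example, commits to erasure-coded data with namespaced Merkle trees) and, as noted above, need their own security to guarantee the data is actually served.

```python
from __future__ import annotations

import hashlib


class ExternalDA:
    """Hypothetical off-mainnet DA layer that stores full transaction batches."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def publish(self, batch: bytes) -> str:
        commitment = hashlib.sha256(batch).hexdigest()
        self._blobs[commitment] = batch
        return commitment

    def retrieve(self, commitment: str) -> bytes:
        return self._blobs[commitment]


def verify_batch(commitment: str, batch: bytes) -> bool:
    """Anyone can check that data served by the DA layer matches the mainnet record."""
    return hashlib.sha256(batch).hexdigest() == commitment


mainnet_commitments: list[str] = []  # the mainnet keeps only 32-byte commitments

da = ExternalDA()
batch = b"transfer alice->bob 3;" * 1_000
commitment = da.publish(batch)
mainnet_commitments.append(commitment)
print(len(batch), len(bytes.fromhex(commitment)))  # 22000 32
```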

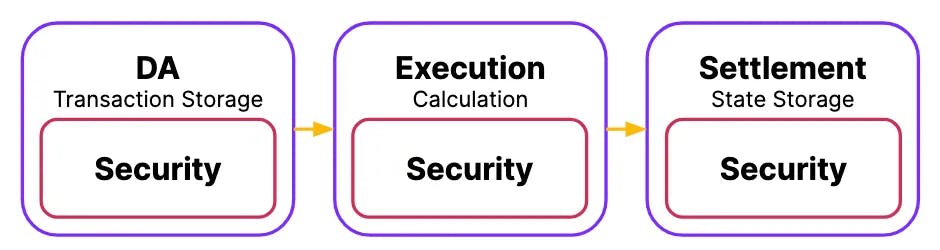

This is the modular landscape as of today. However, this current architecture has some key issues.

Bottlenecks with Today’s Modular Architecture

While this current modular architecture is an improvement in scalability, it is nowhere near enough to support mainstream use cases. Supporting the global value infrastructure will require many thousands of execution environments. Standing up security for even one execution environment is operationally taxing and hugely expensive. Standing up thousands is a non-starter.

Similarly, to support the thousands of execution chains worth of traffic, a single DA and settlement layer is not enough; many parallel DA and settlement layers need to be provisioned and secured. However, launching and securing any new chain requires a huge amount of work and resources. To make a truly scalable architecture, we need to automate this process.

Next Step: Modularizing Security

The most difficult part of any decentralized infrastructure is provisioning the security. A truly scalable and elastic architecture is only possible if we can separate out the security from the block production modules and delegate that task to a separate dedicated chain (let’s call that the mainnet now).

How do we modularize the security?

Typically, malicious activity on a chain is punished by slashing the responsible validator on that same chain. The interesting thing about proof of stake is that the security of the chain is self-referential. Correct block production happens due to the underlying security (value at stake): validators continue to produce correct blocks because of the risk of losing their assets. However, the underlying security is itself enforced by correct block production, because the record of the validators’ assets is stored in the ledger itself.

We can use Cross Chain Validation (CCV) to modularize this security off the chain. CCV is a Cosmos innovation similar to Eigenlayer’s re-staking that allows chains to borrow security from another chain. With CCV, any malicious activity in the DA and execution chain is relayed back to the mainnet where the slashing is enforced. This way, the modules automatically inherit the full security of the mainnet.
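The CCV flow can be sketched with two toy chains and a relay. In this Python model, a consumer (DA or execution) chain detects a validator signing two different blocks at the same height and queues an evidence packet; the relay delivers it to the provider (mainnet), which is the only place stake lives and therefore the only place slashing happens. All class names and the 5% slash rate are hypothetical, and the real Cosmos Interchain Security protocol differs in its packet types and evidence handling.

```python
from __future__ import annotations

from dataclasses import dataclass

SLASH_BPS = 500  # hypothetical: slash 5% (500 basis points) for double-signing


@dataclass(frozen=True)
class SignedBlock:
    validator: str
    height: int
    block_hash: str


@dataclass(frozen=True)
class EvidencePacket:
    """Message relayed from a consumer chain back to the mainnet."""
    validator: str
    height: int


class ProviderChain:
    """The mainnet: stake lives here, so slashing is enforced here."""

    def __init__(self, stakes: dict[str, int]) -> None:
        self.stakes = dict(stakes)
        self._handled: set[EvidencePacket] = set()

    def on_evidence(self, packet: EvidencePacket) -> None:
        if packet in self._handled:
            return  # never punish the same offence twice
        self._handled.add(packet)
        stake = self.stakes[packet.validator]
        self.stakes[packet.validator] = stake - stake * SLASH_BPS // 10_000


class ConsumerChain:
    """A DA or execution chain validated by the provider's validator set."""

    def __init__(self) -> None:
        self._seen: dict[tuple[str, int], str] = {}
        self.outbox: list[EvidencePacket] = []

    def receive(self, block: SignedBlock) -> None:
        first_hash = self._seen.setdefault((block.validator, block.height), block.block_hash)
        if first_hash != block.block_hash:
            # Same validator signed two different blocks at one height: equivocation.
            self.outbox.append(EvidencePacket(block.validator, block.height))


def relay(consumer: ConsumerChain, provider: ProviderChain) -> None:
    """Stand-in for the relayer carrying packets from the consumer to the mainnet."""
    while consumer.outbox:
        provider.on_evidence(consumer.outbox.pop(0))


provider = ProviderChain({"val1": 1_000, "val2": 1_000})
consumer = ConsumerChain()
consumer.receive(SignedBlock("val1", 5, "aa"))
consumer.receive(SignedBlock("val1", 5, "bb"))
relay(consumer, provider)
print(provider.stakes)  # {'val1': 950, 'val2': 1000}
```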

In this model, the mainnet’s only responsibility is to acquire security and share it with the various off-mainnet block production modules. In addition to improving scalability, unifying the security for the entire block production cycle in this fashion makes for a safer architecture. In the current modular world, the amount of security for a given transaction is probabilistic, depending on where it is in the block production cycle. Probabilistic security leads to many issues in bridging and user experience.
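One simplified way to see why unified security is safer is a weakest-link model: an attacker only needs to corrupt the cheapest stage a transaction passes through. The stake figures below are hypothetical arbitrary units, and the model ignores many real-world factors; it only illustrates how fragmented security caps a transaction's guarantees at its weakest stage.

```python
from __future__ import annotations


def effective_security(stage_security: dict[str, int]) -> int:
    """Simplified model: corrupting a transaction costs as much as its weakest stage."""
    return min(stage_security.values())


# Hypothetical stake (arbitrary units) behind each stage of the block production cycle.
fragmented = {"data_availability": 2_000, "execution": 50, "settlement": 30_000}
shared = dict.fromkeys(fragmented, 30_000)  # every stage backed by the mainnet's stake

print(effective_security(fragmented))  # 50
print(effective_security(shared))  # 30000
```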

The Inevitability of Integration

The concept of modularity was not invented in Web3. In fact, the cycle of specialization and integration has driven many past innovation cycles. In the early PC days, the CPU was a generalized solution for all compute. Eventually, new use cases necessitated additional specialized modules external to the CPU. For example, graphical workloads were pushed into the GPU, audio into sound cards and networking into network cards. Each of these specialized modules eventually went through many improvement iterations until it became standardized and commoditized.

Once these modularized solutions are sufficiently optimized and standardized, it is often more efficient to integrate them back into a single package. Most processors and SoCs found today are integrated products that handle some combination of general, graphical, audio and network compute.

Similarly, many parts of the modular blockchain architecture will be integrated for increased efficiency.

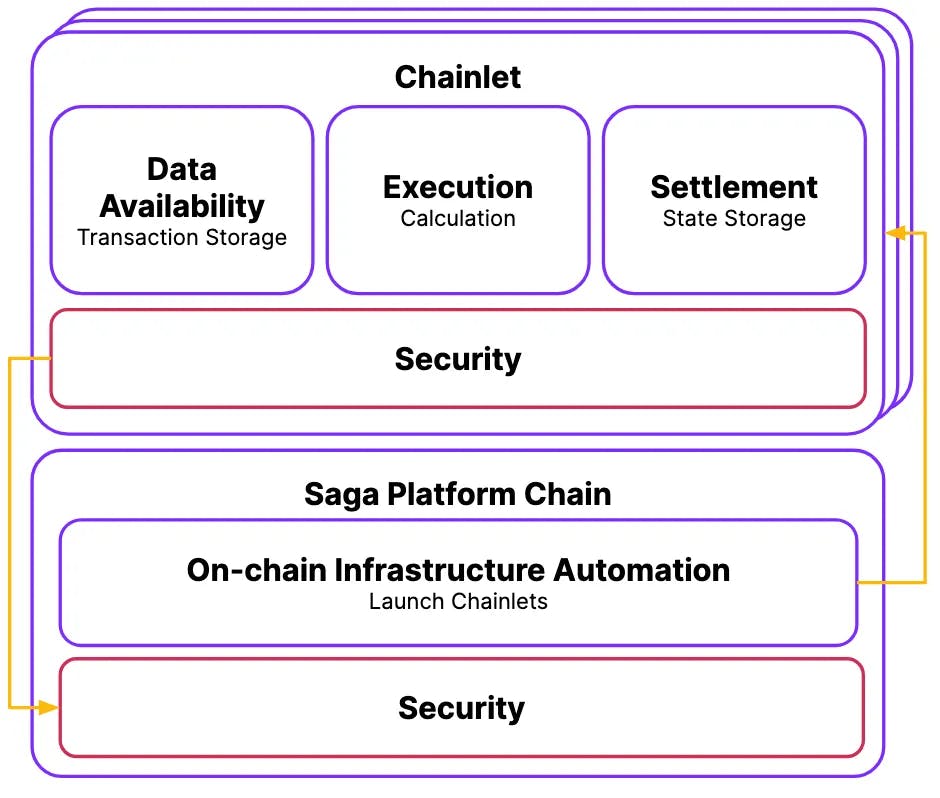

Next Step: Integrating Block Production and Automating Scalability

There’s a funny thing that happens once you move all the block production components off of the mainnet. Just like how modern processors have integrated the various computing modules back into a single SoC for improved efficiency, future blockchain architectures will integrate the block production modules into a single module. The only reason these block production modules were split to begin with was to offload execution from the mainnet. With an integrated security architecture, it makes little sense to keep them separate. We expect the off-mainnet DA, execution and settlement logic to integrate back into a single component.

Going further, instead of having the mainnet just maintain and share security, we can introduce a new function on the mainnet that automates the launching of the off-mainnet components. By moving all of the block production modules off the mainnet and introducing the ability to launch them automatically, we can build an infinitely scalable architecture.

In fact, this is exactly Saga’s architecture. We call our mainnet the Saga Platform Chain and our off-mainnet block production component a Chainlet.
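The platform chain's role of provisioning secured block production on demand can be sketched in a few lines. The class and method names below borrow Saga's terminology but are hypothetical; this is not Saga's actual interface. Each chainlet stands for an integrated DA, execution and settlement unit, every chainlet inherits the platform's validator set, and `scale_to` assumes a hypothetical fixed throughput per chainlet to decide how many to launch.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Chainlet:
    """Hypothetical integrated DA + execution + settlement unit."""
    chain_id: str
    validators: frozenset[str]  # inherited from the platform chain, not recruited separately


class PlatformChain:
    """Hypothetical mainnet: acquires security and provisions chainlets on demand."""

    def __init__(self, validators: set[str]) -> None:
        self.validators = frozenset(validators)
        self.chainlets: dict[str, Chainlet] = {}

    def launch_chainlet(self, chain_id: str) -> Chainlet:
        if chain_id in self.chainlets:
            raise ValueError(f"{chain_id} already exists")
        chainlet = Chainlet(chain_id, self.validators)
        self.chainlets[chain_id] = chainlet
        return chainlet

    def scale_to(self, app: str, demand_tps: int, per_chainlet_tps: int) -> list[str]:
        """Ensure enough chainlets exist for `app` to serve the requested throughput."""
        needed = -(-demand_tps // per_chainlet_tps)  # ceiling division
        ids = [f"{app}-{i}" for i in range(needed)]
        for chain_id in ids:
            if chain_id not in self.chainlets:
                self.launch_chainlet(chain_id)
        return ids


platform = PlatformChain({"v1", "v2", "v3"})
print(platform.scale_to("game", demand_tps=2_500, per_chainlet_tps=1_000))  # ['game-0', 'game-1', 'game-2']
```

The design point the sketch tries to capture is that launching a new chainlet requires no new validator recruitment: security comes from the platform's existing validator set.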

Conclusion

Is blockchain modularity inevitable? Most certainly so. In this article, we have investigated the motivations and paths in the blockchain ecosystem that have led us to this modular architecture. We then outlined the current limitations of the modular architecture as it stands today. Finally, we explored what’s next with modularism. With modularized security and reintegration of the block production cycle, we can already see the bleeding edge converging on the Saga design.

Witness the inevitable state of modularism happening today at Saga.